1. Overview of Spark Structured Streaming Triggers

2. Steps for Incremental Data Processing

3. Create Working Directory in HDFS

4. Logic to Upload GHArchive Files

5. Upload GHArchive Files to HDFS

6. Add new GHActivity JSON Files

7. Read JSON Data using Spark Structured streaming

8. Write in Parquet File Format

9. Analyze GHArchive Data in Parquet files using Spark

10. Add New GHActivity JSON files

11. Load Data Incrementally to Target Table

12. Validate Incremental Load

13. Add New GHActivity JSON files

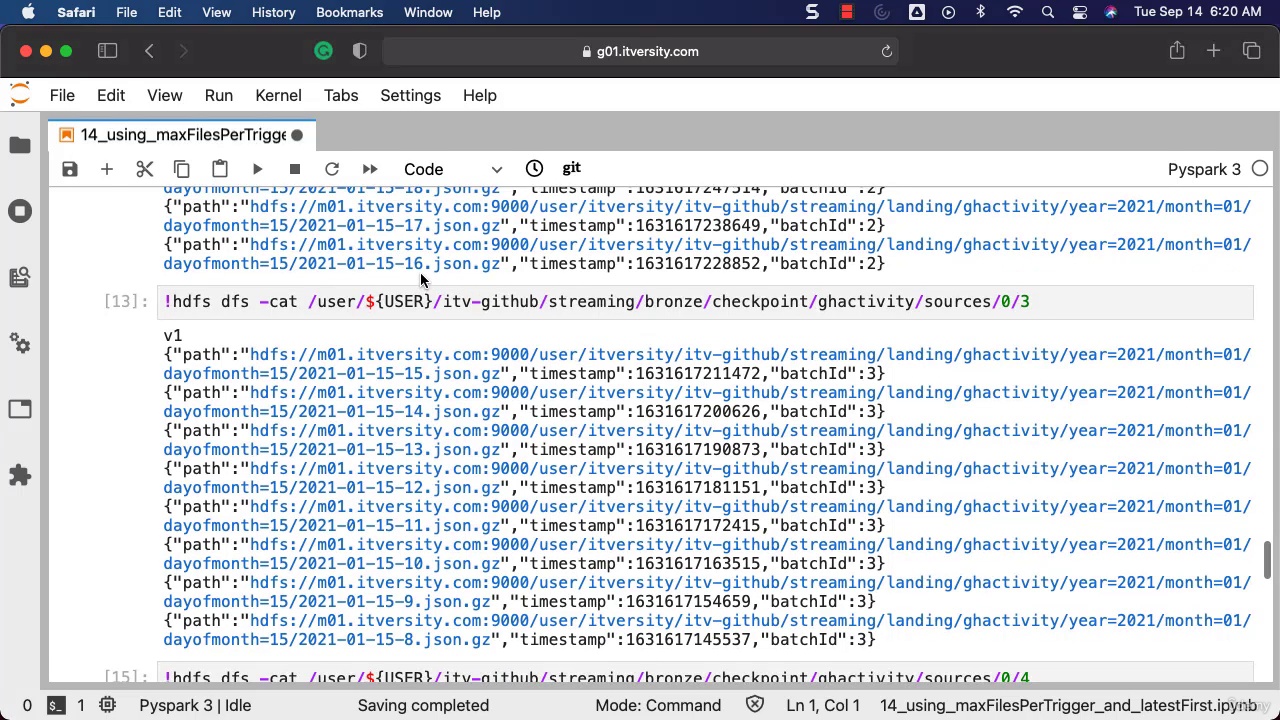

14. Using maxFilerPerTrigger and latestFirst

15. Validate Incremental Load

16. Add New GHActivity JSON files

17. Incremental Load using Archival Process

18. Validate Incremental Load